K8s Series - How kube-proxy Handles Traffic Forwarding

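In Kubernetes, a Service is an L4 (TCP/UDP/SCTP) load balancer that uses destination network address translation (DNAT) to redirect inbound traffic to backend pods. The redirection is set up by kube-proxy, which runs on every node.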

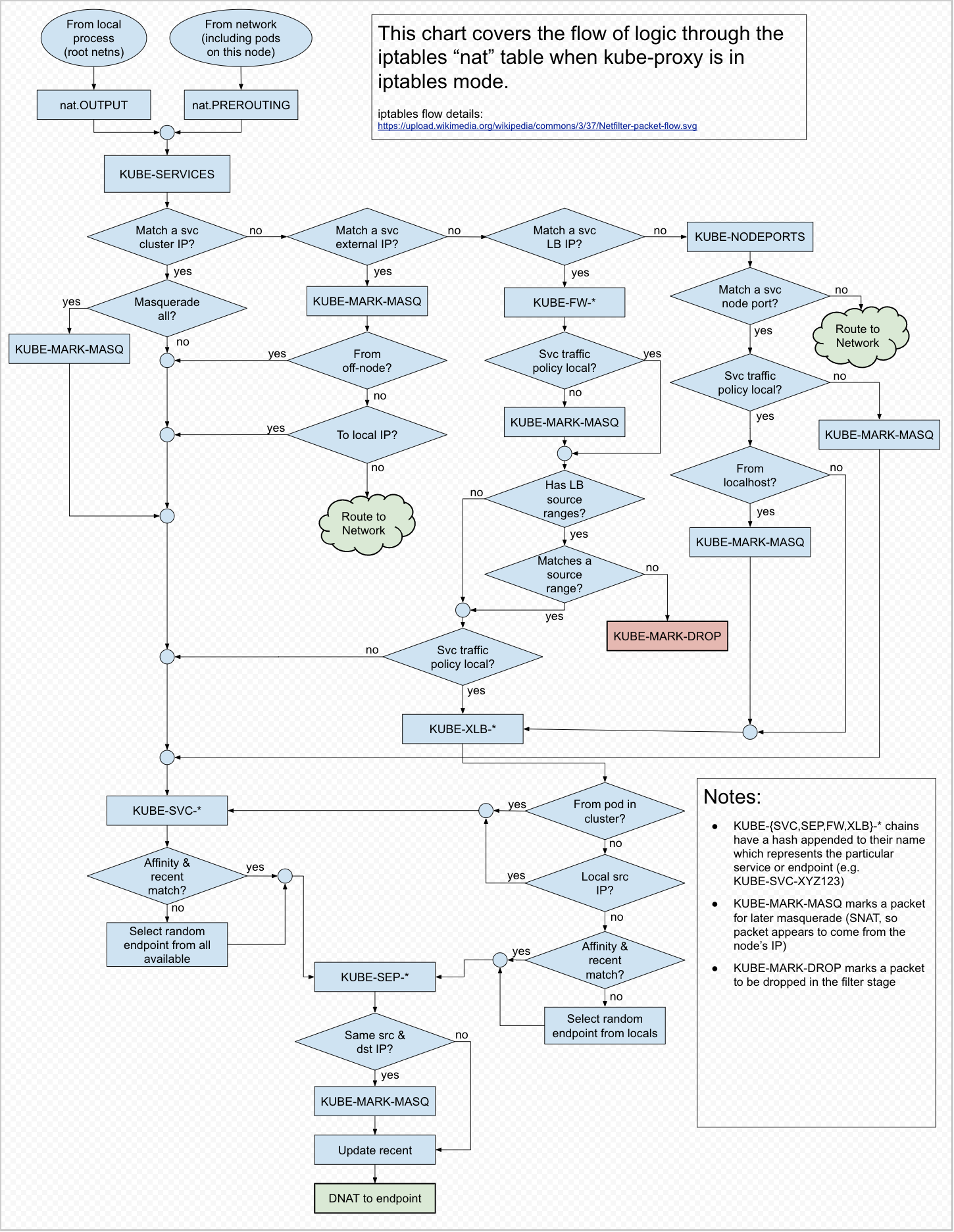

This article covers how kube-proxy works in iptables mode.

How kube-proxy handles traffic forwarding

kube-proxy watches kube-apiserver for added and removed Service and Endpoints objects and configures routing rules in response. It currently supports three operating modes:

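- userspace (I have not used it)

  - Rarely used. In this mode, kube-proxy opens a randomly chosen port on the local node for each Service. Any connection to this "proxy port" is proxied to one of the Service's backend pods

- iptables (default)

  - Built on Netfilter. For each Service, it installs iptables rules that capture traffic to the Service's clusterIP and port and redirect it to one of the Service's backends. For each Endpoints object, it installs iptables rules that select a backend pod. This is the default mode on most platforms

- ipvs

  - This mode calls the netlink interface to create IPVS rules as needed and periodically synchronizes them with the Kubernetes Services and Endpoints. It requires the IPVS kernel modules to be loaded on Linux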

externalTrafficPolicy

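This setting indicates whether the Service wants external traffic routed to node-local endpoints or to endpoints across the whole cluster. There are two options: Cluster (the default) and Local.

- Cluster

  - Packets sent to a NodePort Service are SNATed to the node's IP

  - If an external packet is routed to a node that hosts no pod for the Service, the proxy forwards it to a pod on another host. This can add an extra hop

  - Kubernetes load balances across the whole cluster, so every pod of the Service is a candidate and the overall load spread is good

- Local

  - Applies to NodePort and LoadBalancer Services

  - Packets are not SNATed, so the client source IP is preserved

  - When a packet reaches a node that hosts no pod for the Service, it is dropped, which avoids the extra hop between nodes

  - Kubernetes load balances within the node only, so the proxy spreads load across the pods on the local node. Correcting any imbalance between nodes is left to the external load balancer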
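The difference between the two policies can be sketched as a small model. This is only an illustration of the behaviour listed above, not kube-proxy's code; the `Endpoint` type, the node names, and the `10.42.1.5` address are made up for the example.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    ip: str
    node: str  # hypothetical name of the node the pod runs on


def eligible_endpoints(endpoints, receiving_node, policy):
    """Return the endpoints a packet arriving at receiving_node may be sent to."""
    if policy == "Cluster":
        # Every endpoint in the cluster is a candidate (possible extra hop).
        return list(endpoints)
    if policy == "Local":
        # Only endpoints on the node that received the packet are candidates.
        return [ep for ep in endpoints if ep.node == receiving_node]
    raise ValueError(f"unknown externalTrafficPolicy: {policy!r}")


def route(endpoints, receiving_node, policy, rng=random):
    """Pick a backend, or return None when the packet would be dropped."""
    candidates = eligible_endpoints(endpoints, receiving_node, policy)
    if not candidates:
        return None
    return rng.choice(candidates)


endpoints = [
    Endpoint("10.42.0.9", "node-a"),
    Endpoint("10.42.0.10", "node-a"),
    Endpoint("10.42.1.5", "node-b"),
]

print(route(endpoints, "node-c", "Cluster"))  # some endpoint on another node
print(route(endpoints, "node-c", "Local"))    # None: no local endpoint
```

With Local, a node without a matching pod never forwards the packet, which is why the external load balancer has to send traffic only to nodes that host endpoints.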

chains of iptables

When a Service or Endpoints object is created, kube-proxy writes several iptables chains that perform filtering and NAT between pods and Services:

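- KUBE-SERVICES: the entry point for Service packets. It matches the destination IP:port and dispatches the packet to the corresponding KUBE-SVC-* chain

- KUBE-SVC-*: acts as the load balancer and distributes packets to the KUBE-SEP-* chains. The number of KUBE-SEP-* chains equals the number of endpoints behind the Service. Which KUBE-SEP-* chain is chosen is decided at random

- KUBE-SEP-*: represents one Service endpoint. It simply performs DNAT, replacing the Service IP:port with the pod's endpoint IP:port

- KUBE-MARK-MASQ: adds a Netfilter mark to packets destined for a Service that originate outside the cluster network. Packets carrying this mark are rewritten by a POSTROUTING rule using source network address translation (SNAT), with the node's IP as the new source IP

- KUBE-MARK-DROP: adds a Netfilter mark to packets that have no destination NAT enabled. These packets are dropped in the KUBE-FIREWALL chain

- KUBE-EXT-*: handles traffic that reaches a Service from outside the cluster (node port, load balancer IP, or external IP). It marks packets for SNAT where needed and then hands them to the Service's load-balancing chain

- KUBE-NODEPORTS: present when Services of type NodePort or LoadBalancer are deployed. Through this chain, external sources can reach a Service via the node port. It matches the node port and dispatches the packet to the corresponding KUBE-SVC-* chain (externalTrafficPolicy: Cluster) or KUBE-XLB-* chain (externalTrafficPolicy: Local). In the kube-proxy version that produced the output in this article, KUBE-NODEPORTS jumps to KUBE-EXT-* in both cases

- KUBE-XLB-*: used when externalTrafficPolicy is set to Local. With this chain in place, packets are dropped if the node hosts no endpoint for the Service. The output in this article shows KUBE-EXT-* and KUBE-SVL-* chains in this role instead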
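To make the chain hand-off concrete, here is a minimal sketch that models the KUBE-SERVICES → KUBE-SVC-* → KUBE-SEP-* path as ordered rule lists where the first matching rule wins. The chain names `KUBE-SVC-EXAMPLE` and `KUBE-SEP-EXAMPLE-n` are hypothetical, and the random backend choice is abstracted as a pre-drawn index in `pkt["choice"]`; real kube-proxy chains carry more rules than this.

```python
def build_chains(cluster_ip, port, backends):
    """Build a toy rule set: each chain is a list of (match, target) pairs."""
    svc = "KUBE-SVC-EXAMPLE"  # hypothetical chain name
    chains = {
        "KUBE-SERVICES": [
            # Match on destination IP:port, then jump to the service chain.
            (lambda pkt: (pkt["dst"], pkt["dport"]) == (cluster_ip, port), svc),
        ],
        svc: [],
    }
    for i, backend in enumerate(backends):
        sep = f"KUBE-SEP-EXAMPLE-{i}"  # hypothetical chain name
        # The service chain selects exactly one endpoint chain.
        chains[svc].append((lambda pkt, i=i: pkt["choice"] == i, sep))
        # The endpoint chain performs the DNAT to the pod IP:port.
        chains[sep] = [(lambda pkt: True, ("DNAT", backend))]
    return chains


def traverse(chains, pkt, chain="KUBE-SERVICES"):
    """Follow jumps until a DNAT target rewrites the destination."""
    for match, target in chains[chain]:
        if match(pkt):
            if isinstance(target, tuple):  # ("DNAT", "ip:port")
                ip, port = target[1].rsplit(":", 1)
                return {**pkt, "dst": ip, "dport": int(port)}
            return traverse(chains, pkt, target)
    return pkt  # no rule matched: the packet is left untouched


backends = ["10.42.0.10:8080", "10.42.0.11:8080", "10.42.0.9:8080"]
chains = build_chains("10.43.91.128", 6711, backends)
out = traverse(chains, {"dst": "10.43.91.128", "dport": 6711, "choice": 1})
print(out["dst"], out["dport"])
```

A packet addressed to anything other than the Service IP:port falls through KUBE-SERVICES unchanged in this model.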

Workflow of kube-proxy iptables

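Hands-on

NodePort and ClusterIP are used as examples here.

ClusterIP

Using different configurations, we will look at five kinds of ClusterIP Service:

- normal: a minimal Service definition that has available backend pods and is not headless

- session affinity

- external IP

- no endpoints

- headless Service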

Service yaml:

    apiVersion: v1
    kind: Service
    metadata:
      name: echo
    spec:
      ports:
      - port: 6711
        targetPort: 8080
      selector:
        app: echo

Apply the file above with kubectl apply, then resolve the Service name from a debug pod:

kubectl run -it --rm --restart=Never debug --image=nicolaka/netshoot:latest sh

nslookup echo

~ # nslookup echo

Server: 10.43.0.10

Address: 10.43.0.10#53

Name: echo.default.svc.cluster.local

Address: 10.43.91.128

On k3s, check the Service and its endpoints:

[ec2-user@ip-172-31-42-23 k3s]$ sudo kubectl get svc,ep echo

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/echo ClusterIP 10.43.91.128 <none> 6711/TCP 8m32s

NAME ENDPOINTS AGE

endpoints/echo 10.42.0.10:8080,10.42.0.11:8080,10.42.0.9:8080 8m32s

The Service has three endpoints.

Now look at the iptables rules:

[ec2-user@ip-172-31-42-23 k3s]$ sudo iptables -t nat -S | grep KUBE-SERVICES

-N KUBE-SERVICES

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A KUBE-SERVICES -d 10.43.214.178/32 -p tcp -m comment --comment "kube-system/metrics-server:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-Z4ANX4WAEWEBLCTM

-A KUBE-SERVICES -d 10.43.24.120/32 -p tcp -m comment --comment "kube-system/traefik:web cluster IP" -m tcp --dport 80 -j KUBE-SVC-UQMCRMJZLI3FTLDP

-A KUBE-SERVICES -d 172.31.42.23/32 -p tcp -m comment --comment "kube-system/traefik:web loadbalancer IP" -m tcp --dport 80 -j KUBE-EXT-UQMCRMJZLI3FTLDP

-A KUBE-SERVICES -d 10.43.24.120/32 -p tcp -m comment --comment "kube-system/traefik:websecure cluster IP" -m tcp --dport 443 -j KUBE-SVC-CVG3OEGEH7H5P3HQ

-A KUBE-SERVICES -d 172.31.42.23/32 -p tcp -m comment --comment "kube-system/traefik:websecure loadbalancer IP" -m tcp --dport 443 -j KUBE-EXT-CVG3OEGEH7H5P3HQ

-A KUBE-SERVICES -d 10.43.91.128/32 -p tcp -m comment --comment "default/echo cluster IP" -m tcp --dport 6711 -j KUBE-SVC-HV6DMF63W6MGLRDE

-A KUBE-SERVICES -d 10.43.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -d 10.43.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES -d 10.43.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES -d 10.43.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS
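Because `iptables -S` prints one rule per line in command-line syntax, the listing above is easy to inspect programmatically. The sketch below parses a single rule line with `shlex`; `parse_rule` is a hypothetical helper that only understands the handful of flags appearing in this output, not the full iptables grammar.

```python
import shlex


def parse_rule(line):
    """Parse one `iptables -S` rule line into a dict (illustrative subset of flags)."""
    rule = {}
    tokens = iter(shlex.split(line))  # shlex keeps the quoted comment together
    for tok in tokens:
        if tok == "-A":
            rule["chain"] = next(tokens)
        elif tok == "-d":
            rule["dst"] = next(tokens)
        elif tok == "-p":
            rule["proto"] = next(tokens)
        elif tok == "--dport":
            rule["dport"] = int(next(tokens))
        elif tok == "--comment":
            rule["comment"] = next(tokens)
        elif tok == "-j":
            rule["target"] = next(tokens)
        # Anything else (for example "-m tcp") is ignored in this sketch.
    return rule


line = (
    '-A KUBE-SERVICES -d 10.43.91.128/32 -p tcp -m comment '
    '--comment "default/echo cluster IP" -m tcp --dport 6711 '
    '-j KUBE-SVC-HV6DMF63W6MGLRDE'
)
rule = parse_rule(line)
print(rule["comment"], "->", rule["target"])
```

Filtering the parsed rules on `comment` is a convenient way to find the KUBE-SVC-* chain that belongs to a given Service.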

[ec2-user@ip-172-31-42-23 k3s]$ sudo iptables -t nat -L KUBE-SERVICES | grep echo

KUBE-SVC-HV6DMF63W6MGLRDE tcp -- anywhere ip-10-43-91-128.ap-southeast-1.compute.internal /* default/echo cluster IP */ tcp dpt:6711

[ec2-user@ip-172-31-42-23 k3s]$ sudo iptables -t nat -L KUBE-SVC-HV6DMF63W6MGLRDE

Chain KUBE-SVC-HV6DMF63W6MGLRDE (1 references)

target prot opt source destination

KUBE-MARK-MASQ tcp -- !ip-10-42-0-0.ap-southeast-1.compute.internal/16 ip-10-43-91-128.ap-southeast-1.compute.internal /* default/echo cluster IP */ tcp dpt:6711

KUBE-SEP-QKHRHCLFVYAPNTMT all -- anywhere anywhere /* default/echo -> 10.42.0.10:8080 */ statistic mode random probability 0.33333333349

KUBE-SEP-H5H4EIE4RL3K23M2 all -- anywhere anywhere /* default/echo -> 10.42.0.11:8080 */ statistic mode random probability 0.50000000000

KUBE-SEP-E74O23K3CEWRBTQR all -- anywhere anywhere /* default/echo -> 10.42.0.9:8080 */

[ec2-user@ip-172-31-42-23 k3s]$ sudo iptables -t nat -L KUBE-SEP-QKHRHCLFVYAPNTMT

Chain KUBE-SEP-QKHRHCLFVYAPNTMT (1 references)

target prot opt source destination

KUBE-MARK-MASQ all -- ip-10-42-0-10.ap-southeast-1.compute.internal anywhere /* default/echo */

DNAT tcp -- anywhere anywhere /* default/echo */ tcp to:10.42.0.10:8080

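The PREROUTING and OUTPUT chains show that every packet entering or leaving a pod starts in the KUBE-SERVICES chain. In this case, all inbound traffic for the echo Service (destination IP 10.43.91.128, port 6711) is dispatched to KUBE-SVC-HV6DMF63W6MGLRDE, where two steps apply in order:

- When the packet's source IP is outside the pod network (10.42.0.0/16), KUBE-MARK-MASQ marks it so that the source IP is later replaced with a node address

- The packet is then handed to one of the KUBE-SEP-* chains

As shown below, when the Service is accessed from outside the pod network (here from the node itself rather than from a pod), the source IP is replaced with a node address:

172.31.42.23 is the node IP, and 10.43.206.140 is the ClusterIP of the echo-extip Service (port 8711) defined later in this article.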

[ec2-user@ip-172-31-42-23 ~]$ curl --interface 172.31.42.23 -s 10.43.206.140:8711 | grep client

client_address=10.42.0.1

[ec2-user@ip-172-31-42-23 ~]$ sudo conntrack -L -d 10.43.206.140

tcp 6 110 TIME_WAIT src=172.31.42.23 dst=10.43.206.140 sport=35582 dport=8711 src=10.42.0.11 dst=10.42.0.1 sport=8080 dport=16315 [ASSURED] mark=0 secctx=system_u:object_r:unlabeled_t:s0 use=1

conntrack v1.4.6 (conntrack-tools): 1 flow entries have been shown.
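The conntrack entry above holds two tuples: the original direction as the client sent it, and the reply direction as the kernel expects it back. Comparing them shows which translations were applied. The following sketch parses the line with a regular expression; `parse_conntrack` is a hypothetical helper written for this one output format.

```python
import re


def parse_conntrack(line):
    """Split a `conntrack -L` line into its original and reply tuples."""
    pairs = re.findall(r"\b(src|dst|sport|dport)=(\S+)", line)
    if len(pairs) != 8:
        raise ValueError("expected one original and one reply tuple")
    return dict(pairs[:4]), dict(pairs[4:])


line = (
    "tcp 6 110 TIME_WAIT src=172.31.42.23 dst=10.43.206.140 sport=35582 "
    "dport=8711 src=10.42.0.11 dst=10.42.0.1 sport=8080 dport=16315 "
    "[ASSURED] mark=0 secctx=system_u:object_r:unlabeled_t:s0 use=1"
)
original, reply = parse_conntrack(line)

# DNAT: the reply comes from the pod, not from the Service IP that was dialed.
dnat_applied = reply["src"] != original["dst"]
# SNAT: the reply goes to a rewritten source, not to the original client IP.
snat_applied = reply["dst"] != original["src"]
print(dnat_applied, snat_applied)
```

Here the reply source `10.42.0.11:8080` is the backend pod picked by DNAT, and the reply destination `10.42.0.1` is the rewritten source address that the pod reported as `client_address`.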

From inside a debug pod, the same request looks like this:

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl run -it --rm --restart=Never debug --image=nicolaka/netshoot:latest sh

If you don't see a command prompt, try pressing enter.

~ # curl -s 10.43.206.140:8711|grep client

client_address=10.42.0.13

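10.42.0.13 above is the debug pod's IP. When the packet comes from a pod inside the cluster (the debug pod), the source IP is left unchanged.

- KUBE-SVC-*

  In the KUBE-SVC-HV6DMF63W6MGLRDE chain shown earlier, load balancing across pods is done by the iptables statistic module. It uses the probability setting to distribute packets randomly across the KUBE-SEP-* chains

- KUBE-SEP-*

  Each KUBE-SEP-* chain represents one pod or endpoint. It contains two actions:

  - Packets whose source is the endpoint pod itself (a pod reaching itself through the Service) are marked by KUBE-MARK-MASQ for source NAT

  - Packets heading to the pod are DNATed to the pod's IP and port, then routed to the backend pod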
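The probabilities in the KUBE-SVC-* listing (0.333..., 0.5, and no condition on the last rule) look uneven, but because the rules are evaluated in order they give every endpoint the same overall chance. The sketch below checks this with exact fractions; it assumes rule i of n matches with probability 1/(n-i), which is what the three-endpoint output suggests.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Per-rule match probability for n endpoints: 1/n, 1/(n-1), ..., 1."""
    return [Fraction(1, n - i) for i in range(n)]


def selection_probabilities(rule_probs):
    """Overall chance that each rule is the one that finally matches."""
    reach = Fraction(1)  # probability that evaluation reaches the current rule
    overall = []
    for p in rule_probs:
        overall.append(reach * p)
        reach *= 1 - p  # the packet continues only if this rule did not match
    return overall


probs = rule_probabilities(3)
overall = selection_probabilities(probs)
print(probs)    # per-rule probabilities, as written into the chain
print(overall)  # effective share of traffic per endpoint
```

For three endpoints the per-rule values are 1/3, 1/2 and 1, and each endpoint ends up with one third of the traffic.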

session affinity service

YAML definition:

    apiVersion: v1
    kind: Service
    metadata:
      name: echo-session
    spec:
      sessionAffinity: ClientIP
      ports:
      - port: 6711
        targetPort: 8080
      selector:
        app: echo

Query the Service and its endpoints:

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl get svc echo-session

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

echo-session ClusterIP 10.43.16.138 <none> 6711/TCP 32s

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl get ep echo-session

NAME ENDPOINTS AGE

echo-session 10.42.0.10:8080,10.42.0.11:8080,10.42.0.9:8080 42s

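The difference between a "normal" ClusterIP and a ClusterIP with session affinity is this: kube-proxy still uses the statistic iptables module to distribute a client's first request, but it also applies the recent module so that returning clients reach the same backend. The affinity timeout defaults to 10800 seconds.

It can be changed through service.spec.sessionAffinityConfig.clientIP.timeoutSeconds.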
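The effect of combining the two modules can be sketched as a lookup table keyed by client IP. This is a toy model of the behaviour, not of the kernel's recent module itself; the `RecentAffinity` class and its method names are invented for the illustration.

```python
import random


class RecentAffinity:
    """Toy model of ClientIP affinity: remember the backend chosen per source IP."""

    def __init__(self, backends, timeout_seconds=10800, rng=random):
        self.backends = list(backends)
        self.timeout = timeout_seconds
        self.rng = rng
        self.seen = {}  # source IP -> (backend, last-seen timestamp)

    def pick(self, src_ip, now):
        entry = self.seen.get(src_ip)
        if entry is not None and now - entry[1] <= self.timeout:
            backend = entry[0]  # recent client: reuse the remembered backend
        else:
            backend = self.rng.choice(self.backends)  # new or expired: random pick
        self.seen[src_ip] = (backend, now)  # record or refresh the entry
        return backend


aff = RecentAffinity(["10.42.0.10:8080", "10.42.0.11:8080", "10.42.0.9:8080"])
first = aff.pick("10.42.0.13", now=0)
again = aff.pick("10.42.0.13", now=60)
print(first == again)
```

In the iptables listing for this Service, the rules labelled `recent: CHECK` play the role of the lookup and the `recent: SET` in each KUBE-SEP-* chain plays the role of the update.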

[ec2-user@ip-172-31-42-23 ~]$ sudo iptables -t nat -L KUBE-SERVICES | grep echo-session

KUBE-SVC-ZACZRH37NSSVE74M tcp -- anywhere ip-10-43-16-138.ap-southeast-1.compute.internal /* default/echo-session cluster IP */ tcp dpt:6711

[ec2-user@ip-172-31-42-23 ~]$ sudo iptables -t nat -L KUBE-SVC-ZACZRH37NSSVE74M

Chain KUBE-SVC-ZACZRH37NSSVE74M (1 references)

target prot opt source destination

KUBE-MARK-MASQ tcp -- !ip-10-42-0-0.ap-southeast-1.compute.internal/16 ip-10-43-16-138.ap-southeast-1.compute.internal /* default/echo-session cluster IP */ tcp dpt:6711

KUBE-SEP-5S4SOT7OIG3HMTHM all -- anywhere anywhere /* default/echo-session -> 10.42.0.10:8080 */ recent: CHECK seconds: 10800 reap name: KUBE-SEP-5S4SOT7OIG3HMTHM side: source mask: 255.255.255.255

KUBE-SEP-RBDR2NWVDKJFF37X all -- anywhere anywhere /* default/echo-session -> 10.42.0.11:8080 */ recent: CHECK seconds: 10800 reap name: KUBE-SEP-RBDR2NWVDKJFF37X side: source mask: 255.255.255.255

KUBE-SEP-ZYFM7UQEEXHOQCCO all -- anywhere anywhere /* default/echo-session -> 10.42.0.9:8080 */ recent: CHECK seconds: 10800 reap name: KUBE-SEP-ZYFM7UQEEXHOQCCO side: source mask: 255.255.255.255

KUBE-SEP-5S4SOT7OIG3HMTHM all -- anywhere anywhere /* default/echo-session -> 10.42.0.10:8080 */ statistic mode random probability 0.33333333349

KUBE-SEP-RBDR2NWVDKJFF37X all -- anywhere anywhere /* default/echo-session -> 10.42.0.11:8080 */ statistic mode random probability 0.50000000000

KUBE-SEP-ZYFM7UQEEXHOQCCO all -- anywhere anywhere /* default/echo-session -> 10.42.0.9:8080 */

[ec2-user@ip-172-31-42-23 ~]$ sudo iptables -t nat -L KUBE-SEP-5S4SOT7OIG3HMTHM

Chain KUBE-SEP-5S4SOT7OIG3HMTHM (2 references)

target prot opt source destination

KUBE-MARK-MASQ all -- ip-10-42-0-10.ap-southeast-1.compute.internal anywhere /* default/echo-session */

DNAT tcp -- anywhere anywhere /* default/echo-session */ recent: SET name: KUBE-SEP-5S4SOT7OIG3HMTHM side: source mask: 255.255.255.255 tcp to:10.42.0.10:8080

external ip service

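If there are external IPs that route to one or more cluster nodes, a Kubernetes Service can be exposed on those externalIPs. Traffic that enters the cluster with the external IP as its destination IP and the Service port as its destination port is routed to one of the Service endpoints.

YAML definition: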

    apiVersion: v1
    kind: Service
    metadata:
      name: echo-extip
    spec:
      ports:
      - port: 8711
        targetPort: 8080
      selector:
        app: echo
      externalIPs:
      - 172.31.42.23

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl get svc echo-extip

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

echo-extip ClusterIP 10.43.206.140 172.31.42.23 8711/TCP 4h2m

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl get ep echo-extip

NAME ENDPOINTS AGE

echo-extip 10.42.0.10:8080,10.42.0.11:8080,10.42.0.9:8080 4h2m

iptables rules (dpt:nvc is port 8711, which iptables -L prints by service name; add -n for numeric output):

[ec2-user@ip-172-31-42-23 ~]$ sudo iptables -t nat -L KUBE-SERVICES | grep echo-extip

KUBE-SVC-VICMHRUMYETMHGPR tcp -- anywhere ip-10-43-206-140.ap-southeast-1.compute.internal /* default/echo-extip cluster IP */ tcp dpt:nvc

KUBE-EXT-VICMHRUMYETMHGPR tcp -- anywhere ip-172-31-42-23.ap-southeast-1.compute.internal /* default/echo-extip external IP */ tcp dpt:nvc

no endpoint service

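A ClusterIP Service is associated with its backend pods through a selector.

If no pod matches the Service's selector, the Service is created with no endpoints behind it. In that case kube-proxy creates no NAT rules for routing its traffic.

YAML definition: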

    apiVersion: v1
    kind: Service
    metadata:
      name: echo-noep
    spec:
      ports:
      - port: 8711
        targetPort: 8080
      selector:
        app: echo-noep

sudo iptables -t nat -L KUBE-SERVICES | grep echo-noep returns nothing, because the Service has no endpoints:

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl get svc echo-noep

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

echo-noep ClusterIP 10.43.136.207 <none> 8711/TCP 11s

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl get ep echo-noep

NAME ENDPOINTS AGE

echo-noep <none> 19s

[ec2-user@ip-172-31-42-23 ~]$ sudo iptables-save -t nat | grep echo-noep

[ec2-user@ip-172-31-42-23 ~]$

A Service defined without a selector likewise gets no endpoints automatically.

headless service

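Sometimes you need neither load balancing nor a single Service IP. In that case you can create a so-called "headless" Service by explicitly setting the cluster IP (.spec.clusterIP) to "None".

YAML definition: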

    apiVersion: v1
    kind: Service
    metadata:
      name: echo-headless
    spec:
      clusterIP: None
      ports:
      - port: 9711
        targetPort: 8080
      selector:
        app: echo

Enter the debug pod and run dig echo-headless +search +short:

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl run -it --rm --restart=Never debug --image=nicolaka/netshoot:latest sh

If you don't see a command prompt, try pressing enter.

~ # dig echo-headless +search +short

10.42.0.11

10.42.0.9

10.42.0.10

Meanwhile, sudo iptables -t nat -L KUBE-SERVICES | grep echo-headless returns no rules.
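Since a headless Service has no virtual IP and no iptables rules, choosing a backend is left to the client, which sees every pod IP in the DNS answer. A minimal sketch of collecting those addresses with the standard resolver follows; `resolve_backends` is a hypothetical helper, and the example call assumes it runs inside a pod where the name `echo-headless` resolves.

```python
import socket


def resolve_backends(name, port, getaddrinfo=socket.getaddrinfo):
    """Return the distinct IPv4 addresses that a DNS name resolves to."""
    infos = getaddrinfo(name, port, family=socket.AF_INET, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port).
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    try:
        # Assumes in-cluster DNS; outside a cluster this lookup is expected to fail.
        print(resolve_backends("echo-headless", 8080))
    except socket.gaierror as exc:
        print("lookup failed:", exc)
```

The example connects to port 8080 rather than 9711 because, without kube-proxy rules, nothing translates the Service port to the targetPort; the client talks to the pod directly.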

NodePort

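With a Service of type NodePort, Kubernetes exposes the Service at nodeIP:nodePort. Kubernetes creates a ClusterIP Service that the NodePort Service routes to, and at the same time opens a specific port (the node port) on every node. Any traffic sent to that port is forwarded to a target pod.

The entry point for the iptables rules that handle NodePort traffic is the KUBE-SERVICES chain.

The KUBE-NODEPORTS rule is the last rule in the KUBE-SERVICES chain. A packet that matches none of the preceding rules and is addressed to a local address of the node is handed to the KUBE-NODEPORTS chain for further processing.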

[ec2-user@ip-172-31-42-23 ~]$ sudo iptables -t nat -L KUBE-SERVICES

Chain KUBE-SERVICES (2 references)

target prot opt source destination

KUBE-SVC-NPX46M4PTMTKRN6Y tcp -- anywhere ip-10-43-0-1.ap-southeast-1.compute.internal /* default/kubernetes:https cluster IP */ tcp dpt:https

KUBE-SVC-TCOU7JCQXEZGVUNU udp -- anywhere ip-10-43-0-10.ap-southeast-1.compute.internal /* kube-system/kube-dns:dns cluster IP */ udp dpt:domain

KUBE-SVC-ERIFXISQEP7F7OF4 tcp -- anywhere ip-10-43-0-10.ap-southeast-1.compute.internal /* kube-system/kube-dns:dns-tcp cluster IP */ tcp dpt:domain

KUBE-SVC-XS3QDOAWI6R6C3NX tcp -- anywhere ip-10-43-209-114.ap-southeast-1.compute.internal /* default/echo-np cluster IP */ tcp dpt:nvc

KUBE-SVC-ZACZRH37NSSVE74M tcp -- anywhere ip-10-43-16-138.ap-southeast-1.compute.internal /* default/echo-session cluster IP */ tcp dpt:6711

KUBE-SVC-JD5MR3NA4I4DYORP tcp -- anywhere ip-10-43-0-10.ap-southeast-1.compute.internal /* kube-system/kube-dns:metrics cluster IP */ tcp dpt:9153

KUBE-SVC-Z4ANX4WAEWEBLCTM tcp -- anywhere ip-10-43-214-178.ap-southeast-1.compute.internal /* kube-system/metrics-server:https cluster IP */ tcp dpt:https

KUBE-SVC-UQMCRMJZLI3FTLDP tcp -- anywhere ip-10-43-24-120.ap-southeast-1.compute.internal /* kube-system/traefik:web cluster IP */ tcp dpt:http

KUBE-EXT-UQMCRMJZLI3FTLDP tcp -- anywhere ip-172-31-42-23.ap-southeast-1.compute.internal /* kube-system/traefik:web loadbalancer IP */ tcp dpt:http

KUBE-SVC-CVG3OEGEH7H5P3HQ tcp -- anywhere ip-10-43-24-120.ap-southeast-1.compute.internal /* kube-system/traefik:websecure cluster IP */ tcp dpt:https

KUBE-EXT-CVG3OEGEH7H5P3HQ tcp -- anywhere ip-172-31-42-23.ap-southeast-1.compute.internal /* kube-system/traefik:websecure loadbalancer IP */ tcp dpt:https

KUBE-NODEPORTS all -- anywhere anywhere /* kubernetes service nodeports; NOTE: this must be the last rule in this chain */ ADDRTYPE match dst-type LOCAL

There are two kinds of NodePort Service:

- the default (externalTrafficPolicy: Cluster)

- externalTrafficPolicy: Local

externalTrafficPolicy: Cluster mode

    apiVersion: v1
    kind: Service
    metadata:
      name: echo-np
    spec:
      type: NodePort
      ports:
      - port: 8711
        targetPort: 8080
      selector:
        app: echo

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl get svc echo-np

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

echo-np NodePort 10.43.209.114 <none> 8711:31529/TCP 10s

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl get ep echo-np

NAME ENDPOINTS AGE

echo-np 10.42.0.10:8080,10.42.0.11:8080,10.42.0.9:8080 19s

[ec2-user@ip-172-31-42-23 ~]$ sudo iptables -t nat -L KUBE-SERVICES | grep echo-np

KUBE-SVC-XS3QDOAWI6R6C3NX tcp -- anywhere ip-10-43-209-114.ap-southeast-1.compute.internal /* default/echo-np cluster IP */ tcp dpt:nvc

[ec2-user@ip-172-31-42-23 ~]$ sudo iptables -t nat -L KUBE-NODEPORTS

Chain KUBE-NODEPORTS (1 references)

target prot opt source destination

KUBE-EXT-XS3QDOAWI6R6C3NX tcp -- anywhere anywhere /* default/echo-np */ tcp dpt:31529

KUBE-EXT-UQMCRMJZLI3FTLDP tcp -- anywhere anywhere /* kube-system/traefik:web */ tcp dpt:30784

KUBE-EXT-CVG3OEGEH7H5P3HQ tcp -- anywhere anywhere /* kube-system/traefik:websecure */ tcp dpt:32646

externalTrafficPolicy: Local mode

YAML definition:

    apiVersion: v1
    kind: Service
    metadata:
      name: echo-local
    spec:
      ports:
      - port: 8711
        targetPort: 8080
      selector:
        app: echo
      type: NodePort
      externalTrafficPolicy: Local

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl get svc echo-local

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

echo-local NodePort 10.43.77.106 <none> 8711:31534/TCP 9s

[ec2-user@ip-172-31-42-23 ~]$ sudo kubectl get ep echo-local

NAME ENDPOINTS AGE

echo-local 10.42.0.10:8080,10.42.0.11:8080,10.42.0.9:8080 16s

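The path through KUBE-NODEPORTS differs depending on whether the node has local endpoints. If no echo pod is scheduled on the node, the packet is dropped (via KUBE-MARK-DROP). If the node does host a matching pod, KUBE-EXT-TTO3CLWIB2CZNJXW routes the packet on to the next chain.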

[ec2-user@ip-172-31-42-23 ~]$ sudo iptables -t nat -L KUBE-NODEPORTS

Chain KUBE-NODEPORTS (1 references)

target prot opt source destination

KUBE-EXT-TTO3CLWIB2CZNJXW tcp -- anywhere anywhere /* default/echo-local */ tcp dpt:31534

KUBE-EXT-UQMCRMJZLI3FTLDP tcp -- anywhere anywhere /* kube-system/traefik:web */ tcp dpt:30784

KUBE-EXT-CVG3OEGEH7H5P3HQ tcp -- anywhere anywhere /* kube-system/traefik:websecure */ tcp dpt:32646

[ec2-user@ip-172-31-42-23 ~]$ sudo iptables -t nat -L KUBE-EXT-TTO3CLWIB2CZNJXW

Chain KUBE-EXT-TTO3CLWIB2CZNJXW (1 references)

target prot opt source destination

KUBE-SVC-TTO3CLWIB2CZNJXW all -- ip-10-42-0-0.ap-southeast-1.compute.internal/16 anywhere /* pod traffic for default/echo-local external destinations */

KUBE-MARK-MASQ all -- anywhere anywhere /* masquerade LOCAL traffic for default/echo-local external destinations */ ADDRTYPE match src-type LOCAL

KUBE-SVC-TTO3CLWIB2CZNJXW all -- anywhere anywhere /* route LOCAL traffic for default/echo-local external destinations */ ADDRTYPE match src-type LOCAL

KUBE-SVL-TTO3CLWIB2CZNJXW all -- anywhere anywhere
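The four rules in the KUBE-EXT-TTO3CLWIB2CZNJXW listing above can be read as a small decision function. The sketch below mirrors only what the listing shows; the assumption that KUBE-SVL-* contains just the node-local endpoints is mine, since that chain's contents are not printed here, and `kube_ext_local` is a hypothetical name.

```python
import ipaddress

POD_CIDR = ipaddress.ip_network("10.42.0.0/16")  # pod network seen in the listing


def kube_ext_local(src_ip, node_ips):
    """Return (next_chain, masquerade) for a packet entering KUBE-EXT-* under Local."""
    if ipaddress.ip_address(src_ip) in POD_CIDR:
        # Rule 1: pod traffic goes to the cluster-wide service chain.
        return "KUBE-SVC", False
    if src_ip in node_ips:
        # Rules 2 and 3: traffic from the node itself is marked for
        # masquerade and then also goes to the cluster-wide chain.
        return "KUBE-SVC", True
    # Rule 4: all remaining (external) traffic goes to KUBE-SVL-*,
    # assumed here to hold only the endpoints on this node.
    return "KUBE-SVL", False


node_ips = {"172.31.42.23", "127.0.0.1"}
for src in ("10.42.0.13", "172.31.42.23", "203.0.113.7"):
    print(src, kube_ext_local(src, node_ips))
```

Only the last case is subject to the Local restriction, and it is also the only one where the original client IP reaches the pod without masquerading.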